June 11, 2004

Recording the Forces on Extreme Sports

June 8, 2004

Discussion of WiFi Tag/Beacons

In my opinion, the most interesting feature of this device is that it could serve as the authoritative source for data that you don't want available to the wider world. Because the data is broadcast locally, and would be easy to retransmit once someone had a copy of it, it would have to lose its value quickly as it aged. The only example that springs to mind is grocery store prices. A customer might like to know the price of everything in a store upon walking in, but the store doesn't want that information getting out, because it would let people comparison shop without visiting the physical location. Someone inside the store could relay the prices to someone outside, but they would quickly grow stale and unreliable.

I am not overwhelmed by the impact of this class of data, though, so I don't think there is a lot of interesting research to be done here.

Learning Models of Human Behavior with Sequential Patterns

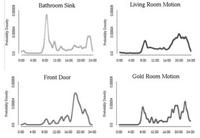

To this data, they applied sequential pattern discovery algorithms to try to find sequences of sensor firings that were well supported by the data. The results were swamped with noise: they found support for so many patterns that the problem of interpreting the raw data became the problem of interpreting the patterns they had discovered. As a result, they resorted to adding a number of heuristic filters to the system. The filters made sense but were unsatisfying from a design point of view. They treated events close in time as likely belonging to the same activity, they "manually" removed subpatterns that were subsumed by others, and they wrote filters to handle individual sensors whose design didn't match their application well.
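To make the filters concrete, here is a minimal sketch of support counting plus two of the heuristics described above: grouping events close in time into one episode, and dropping subpatterns subsumed by a longer pattern. It is not the authors' algorithm. The function names, the `max_gap` and `min_support` parameters, and the simplification to contiguous sequences are all hypothetical choices for illustration.

```python
from collections import Counter


def split_episodes(events, max_gap):
    """Group (timestamp, sensor) events into episodes.

    Hypothetical heuristic: a gap larger than max_gap starts a new episode,
    mirroring the idea that events close in time belong to one activity.
    """
    episodes, current, last_t = [], [], None
    for t, sensor in sorted(events):
        if last_t is not None and t - last_t > max_gap:
            episodes.append(current)
            current = []
        current.append(sensor)
        last_t = t
    if current:
        episodes.append(current)
    return episodes


def frequent_sequences(episodes, min_support, max_len=4):
    """Support = number of episodes containing the contiguous sequence at least once."""
    support = Counter()
    for ep in episodes:
        seen = set()
        for n in range(1, max_len + 1):
            for i in range(len(ep) - n + 1):
                seen.add(tuple(ep[i:i + n]))
        support.update(seen)
    return {seq: c for seq, c in support.items() if c >= min_support}


def is_contiguous_sub(short, long):
    """True if tuple `short` appears as a contiguous run inside tuple `long`."""
    n = len(short)
    return any(long[i:i + n] == short for i in range(len(long) - n + 1))


def drop_subsumed(patterns):
    """Remove a pattern if a longer pattern with the same support contains it."""
    kept = {}
    for seq, c in patterns.items():
        subsumed = any(
            len(other) > len(seq) and oc == c and is_contiguous_sub(seq, other)
            for other, oc in patterns.items()
        )
        if not subsumed:
            kept[seq] = c
    return kept


# Usage with made-up sensor events (timestamps in seconds).
events = [(0, "door"), (5, "hall"), (9, "kitchen"),
          (100, "door"), (104, "hall"), (108, "kitchen"), (300, "bath")]
episodes = split_episodes(events, max_gap=30)
patterns = frequent_sequences(episodes, min_support=2)
print(drop_subsumed(patterns))  # {('door', 'hall', 'kitchen'): 2}
```

Without `drop_subsumed`, every prefix and infix of the one real routine shows up as its own "pattern", which hints at how quickly the output can outgrow the raw data.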

I agree with their own critique of their work, in which they say that they didn't do any analysis to determine the novelty of a pattern. Comparing what they saw to a background model would have made longer sequences appear more unusual in a natural way, without adding hand-tuned rules.
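One way to read the background-model suggestion, sketched under an assumption of my own: treat each sensor firing as independent (an order-0 model estimated from the same data) and score a sequence by its observed count divided by its expected count. The names `background_probs` and `lift` are hypothetical, and a real system would likely want a richer background model (for example, one conditioned on time of day).

```python
from collections import Counter
from math import prod


def background_probs(episodes):
    """Order-0 background model: probability of each sensor firing."""
    counts = Counter(s for ep in episodes for s in ep)
    total = sum(counts.values())
    return {s: c / total for s, c in counts.items()}


def count_occurrences(episodes, seq):
    """Number of positions at which the contiguous sequence occurs."""
    n = len(seq)
    return sum(
        1
        for ep in episodes
        for i in range(len(ep) - n + 1)
        if tuple(ep[i:i + n]) == seq
    )


def lift(episodes, seq, probs):
    """Observed count / count expected if sensors fired independently."""
    n = len(seq)
    positions = sum(max(len(ep) - n + 1, 0) for ep in episodes)
    expected = positions * prod(probs.get(s, 0.0) for s in seq)
    observed = count_occurrences(episodes, seq)
    if expected == 0.0:
        # Sequence uses a sensor absent from the background data.
        return float("inf") if observed else 0.0
    return observed / expected


episodes = [["a", "b", "a", "b"]]
probs = background_probs(episodes)
for seq in [("a",), ("a", "b"), ("a", "b", "a"), ("a", "b", "a", "b")]:
    print(seq, lift(episodes, seq, probs))
```

Because the expected count shrinks multiplicatively with each added sensor, a long sequence that recurs at all gets a high score, so there is no need for a hand-written rule favoring longer patterns.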

I felt that both their sensors and their patterns lacked any real semantics. It was impossible to draw conclusions about what was going on in the patterns, primarily because the sensors themselves carried no semantics. It's hard to interpret a series of motion sensors firing, even if it happens regularly.

They also faced the real-world problems of unusual user routines, patterns that differed across days of the week, pets disturbing the data, sensor failures, and vacations. We as researchers tend to write these off as anomalies, but in a household they are probably closer to the norm.

I appreciated this paper because it helped clarify what our anesthesiology work is not doing. In particular, we are not trying to pull signal out of a lot of noise; we will be doing model merging rather than model discovery. Our models are clearly delineated in time and carry semantics supplied by the person recording the data stream.

This paper had some good data and seemed a little bit like computational biology work in which computers are put to the task of finding conserved sequences in genomes.