January 21, 2005

IJCAI update

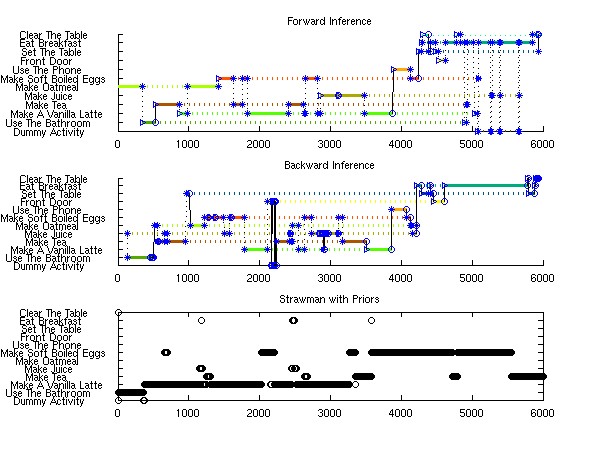

Today, I took some time out to confirm that our straw man was going to do badly. The graphs above confirm this. The top graph shows forward particle filter inference with hand-made models as priors and no training. The second shows backward particle filter inference with hand-made models as priors and no training. I've posted these two graphs before. The third shows exact DBN inference on a model with one state per activity and a fully connected state space. The third model uses a sensor model that was trained on the labelled examples from runs in which I performed single activities by themselves. All three models assume a uniform transition between activities. None of the models were trained on the full breakfast runs, which would help them learn which activities follow each other.
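To make the third setup concrete, here is a minimal sketch of that kind of model: a sensor model estimated by counting objects in labelled single-activity runs, followed by exact forward filtering with a uniform transition between activities. This is not the code behind the graphs. The activity and object names, the function names, and the add-one smoothing (`alpha`) are all assumptions made up for illustration.

```python
from collections import Counter


def train_sensor_model(labelled_runs, objects, alpha=1.0):
    """Estimate P(object | activity) by smoothed counting.

    labelled_runs: dict mapping an activity name to the list of objects
    observed while only that activity was performed (hypothetical data).
    alpha: add-alpha smoothing constant (an assumption, not from the post).
    """
    model = {}
    for activity, seen in labelled_runs.items():
        counts = Counter(seen)
        total = len(seen) + alpha * len(objects)
        model[activity] = {o: (counts[o] + alpha) / total for o in objects}
    return model


def forward_filter(observations, sensor_model):
    """Exact forward filtering with one state per activity.

    The transition model is uniform: every activity is equally likely
    to follow every other activity, including itself.
    """
    activities = list(sensor_model)
    n = len(activities)
    belief = {a: 1.0 / n for a in activities}
    history = []
    for obs in observations:
        # Predict: a uniform transition spreads the belief evenly.
        predicted = {
            a: sum(belief[b] * (1.0 / n) for b in activities)
            for a in activities
        }
        # Update: weight each activity by how well it explains the object.
        unnorm = {a: predicted[a] * sensor_model[a][obs] for a in activities}
        z = sum(unnorm.values())
        belief = {a: p / z for a, p in unnorm.items()}
        history.append(belief)
    return history


# Hypothetical single-activity training runs.
runs = {
    "make_tea": ["kettle", "kettle", "cup"],
    "make_cereal": ["bowl", "spoon", "bowl"],
}
objects = ["kettle", "cup", "bowl", "spoon"]
model = train_sensor_model(runs, objects)
beliefs = forward_filter(["kettle", "bowl"], model)
```

With a strictly uniform transition the predicted belief is flat at every step, so the most likely activity is simply the one that best explains the latest object.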

The conclusion I draw from this is that the simple solution does not look like it is going to do very well. The final word will come after I train it on the full breakfast runs. But this is a good result, since the simple solution is the straw man. The main problem with the third model is that it quickly switches to whichever activity assigns the highest probability to the current observation. The switch doesn't happen immediately, but if the same object is seen repeatedly in rapid succession, the evidence overwhelms the tendency of the model to stay in the same activity.
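The switching behaviour can be illustrated with a two-activity toy filter. This is only a sketch under assumptions: the stay probability, the observation likelihoods, and the starting belief are invented numbers, and the function name is hypothetical. It counts how many identical observations it takes before the belief flips from the current activity to the other one.

```python
def observations_until_switch(stay_prob, p_obs_current, p_obs_other,
                              start_belief=0.99, max_steps=1000):
    """Count repeated observations needed to flip a two-state filter.

    stay_prob: probability of remaining in the same activity per step
               (an assumed value standing in for the model's inertia).
    p_obs_current: likelihood of the observed object under the activity
                   the filter currently believes in.
    p_obs_other: likelihood of the same object under the other activity.
    Returns the number of observations after which the other activity
    becomes more probable, or None if that never happens in max_steps.
    """
    current = start_belief
    other = 1.0 - start_belief
    for step in range(1, max_steps + 1):
        # Predict: mostly stay, occasionally move to the other activity.
        pred_current = current * stay_prob + other * (1.0 - stay_prob)
        pred_other = other * stay_prob + current * (1.0 - stay_prob)
        # Update with the same object observed again.
        w_current = pred_current * p_obs_current
        w_other = pred_other * p_obs_other
        z = w_current + w_other
        current, other = w_current / z, w_other / z
        if other > current:
            return step
    return None


# Assumed numbers: the object is six times likelier under the other activity.
loose = observations_until_switch(0.9, 0.1, 0.6)
sticky = observations_until_switch(0.99, 0.1, 0.6)
```

Under these assumed numbers, raising the stay probability delays the flip but does not prevent it, which matches the behaviour described above: each repeat of the object multiplies in the same likelihood ratio, and the inertia only contributes a bounded amount per step.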

Now on to learning with particle filters.

Posted by djp3 at January 21, 2005 4:35 PM