January 14, 2005

IJCAI update

Data collection is complete

11 activities total

23 training runs of the activities by themselves (2 each plus an extra)

10 full breakfast runs with all 11 activities completed and interleaved

2 incomplete breakfast runs with a subset of the 11 activities completed and interleaved

A total of 60 tags were deployed, of two different types: the round dishwasher type and the flat sticker type.

Data collection used both hands, with two hand readers (one per hand)

January 12, 2005

January 11, 2005

IJCAI update


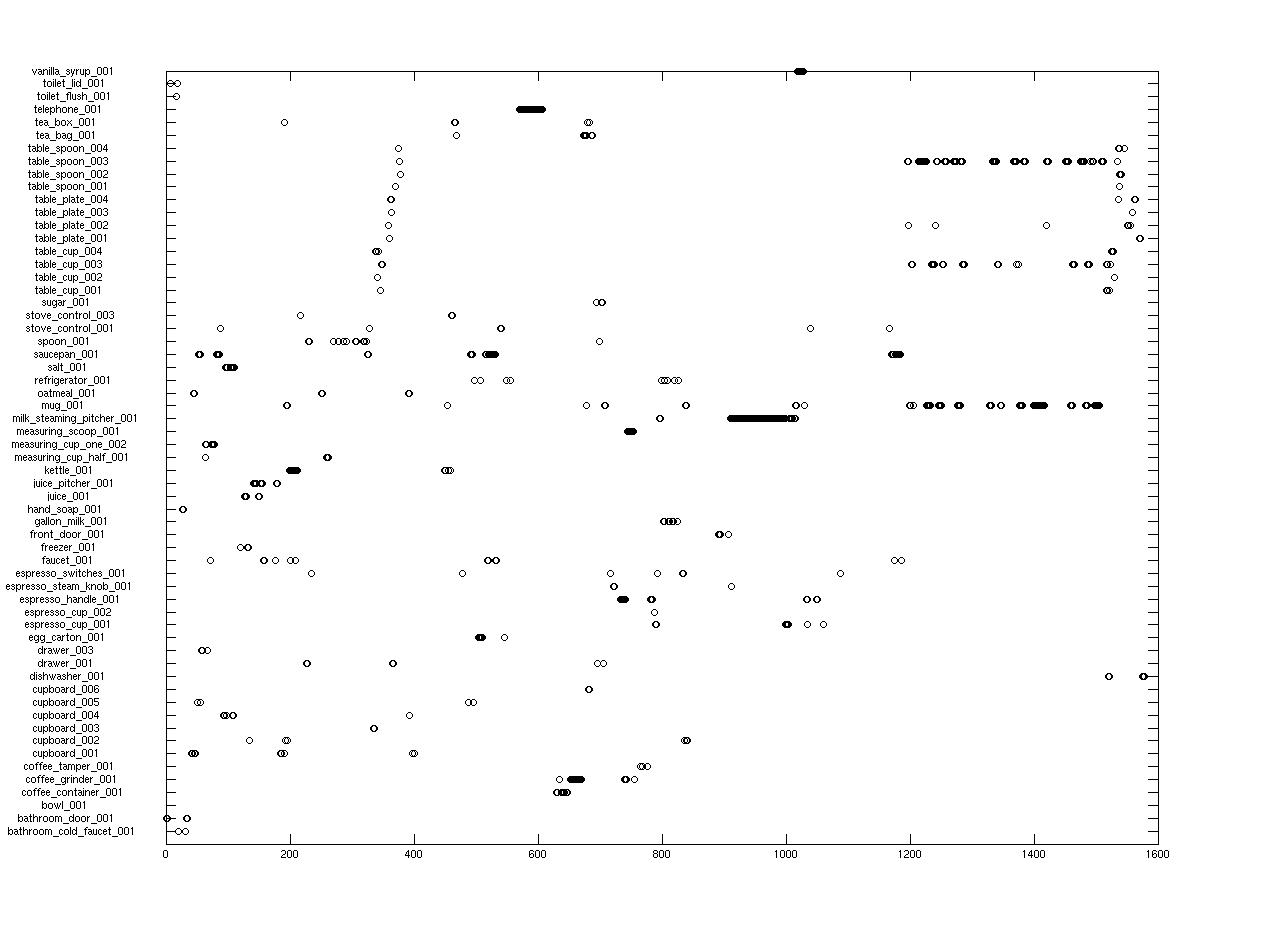

Here is a visualization of the data run from this morning. The x axis is time in seconds and the y axis is objects; each circle is an observation. Some features to note: the vertical stripe in the upper left is setting the table, the three horizontal lines in the upper right are eating, and the dark swatch of hits in the middle is steaming the milk for espresso!
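A plot like this is just a scatter of tag reads: each read becomes one circle at (seconds since start, object row). The sketch below shows one way to build those coordinates. It assumes each read is a hypothetical `(timestamp_seconds, object_name)` tuple and assigns object rows in order of first appearance; the actual read format and row ordering used for the plot above are not stated, and the function name and object names are illustrative only.

```python
from typing import Dict, List, Tuple

# Hypothetical read format: (timestamp in seconds, name of the tagged object).
Read = Tuple[float, str]


def to_scatter_points(reads: List[Read]) -> Tuple[List[float], List[int], List[str]]:
    """Turn tag reads into x/y coordinates for a time-vs-object scatter plot.

    x is seconds elapsed since the earliest read; y is an integer row per object.
    Rows are assigned in order of each object's first appearance (an assumption).
    Returns (xs, ys, labels), where labels[i] is the object shown on row i.
    """
    if not reads:
        return [], [], []
    ordered = sorted(reads)  # sort by timestamp (ties broken by object name)
    t0 = ordered[0][0]
    rows: Dict[str, int] = {}
    xs: List[float] = []
    ys: List[int] = []
    for t, obj in ordered:
        if obj not in rows:
            rows[obj] = len(rows)  # next free row for a newly seen object
        xs.append(t - t0)
        ys.append(rows[obj])
    labels = sorted(rows, key=rows.get)  # object names ordered by row index
    return xs, ys, labels
```

For example, reads of a plate, a fork, the plate again and then a milk pitcher give `xs == [0.0, 1.5, 3.0, 60.0]` and `ys == [0, 1, 0, 2]`. Passing `xs` and `ys` to matplotlib's `plt.scatter`, and the labels to `plt.yticks(range(len(labels)), labels)`, yields a plot of this shape, where a burst of reads on one object (like the espresso milk steaming) shows up as a dense horizontal swatch.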

January 9, 2005

IJCAI update

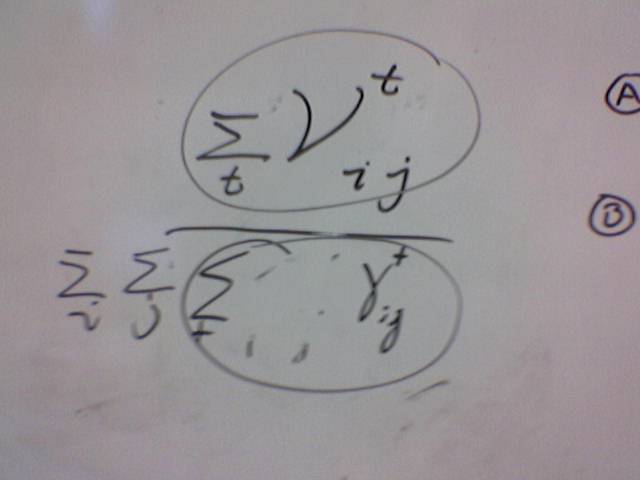

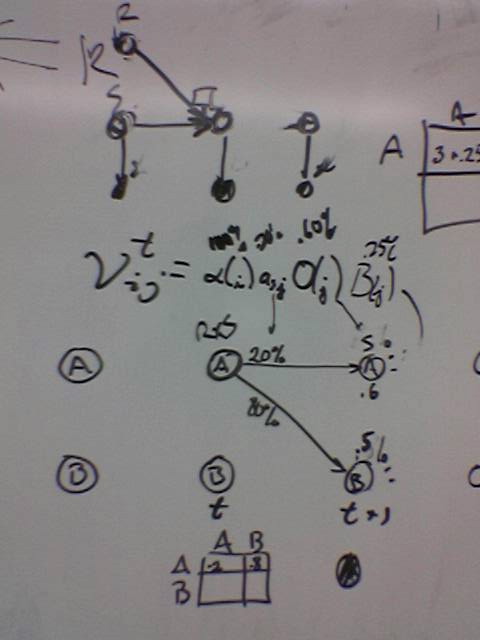

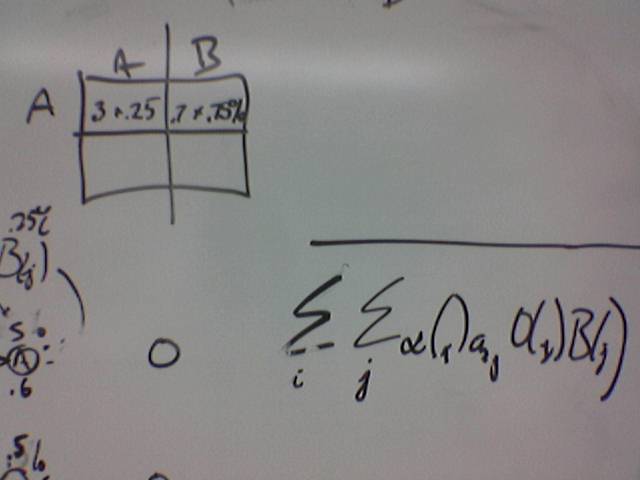

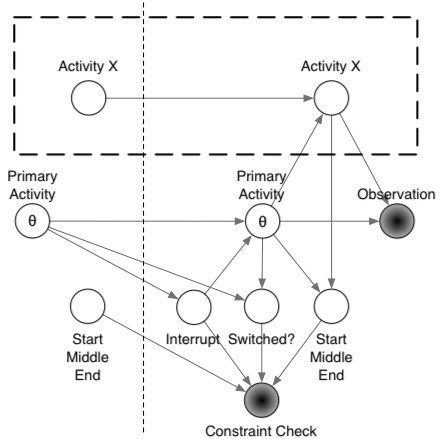

"Current model"
